When more is less: mind the cacheability

I would like to share some lessons I learned years ago about optimizing the performance of web applications.

There are many guidelines and patterns in this regard. I would like to address and confront one of them: minimizing the number of database queries (or web service calls) per request. It sounds like a good heuristic – each network round-trip (e.g. to and from the DB) takes several milliseconds and impacts the overall response time. So having fewer queries is indeed the right approach for many cases.

However, I would like to highlight some situations, when better way is to do the opposite. I will use two examples from two different projects on which I worked in the past.

Story 1: Trading a single query for multiple ones

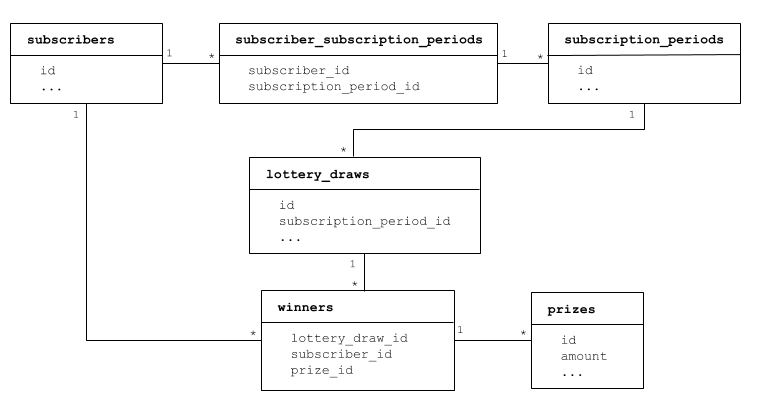

Once I was working on a system for a lottery. The domain model included the concept of Subscription Periods. Participants could buy e.g. a 6-month or 1-year subscription which enabled them to participate in all lottery draws within the corresponding Subscription Periods (one period = 1 month). Each Subscription Period had multiple Lottery Draws (at least one per week). Every Lottery Draw resulted in a list of multiple Winners with corresponding Prizes. A very simplified DB schema could have the form of:

Showing last prizes for the current user

One functionality was to present all prizes from all lottery draws for the last three subscription periods for the currently logged in user. One approach was just to query the database directly. The SQL query was similar to the one below:

select distinct lottery_draw.date, prize.amount

from subscription_periods period

inner join lottery_draws lottery_draw on lottery_draw.subscription_period_id = period.id

inner join winners winner on winner.lottery_draw_id = lottery_draw.id

inner join prizes prize on prize.winner_id = winner.id

where period.id in

(select period2.id from subscription_periods period2 order by id desc limit 3)

and winner.subscriber_id = :subscriber_id

This approach can be perfectly fine for many cases, but for that particular project, due to the hardware setup and the load distribution, there were some problems:

- it had to be executed for each user separately (subscriber ID was the parameter of the query)

- if we wanted to cache that query, then we would have a cache entry for each subscriber (and there were millions of subscribers)

- TTL-based caching was ineffective, because lottery draws were not updated for several days and then updated very frequently during the actual draw time

- testing this code required writing integration tests with a database populated with test data — the application layer just executed this query, so unit test unfortunately did not make much sense

Decomposing the query

The testability aspect lead us to an alternative solution. On the high level, the idea was to:

- Find last three subscription periods (DB query)

- Find all lottery draws in these periods (DB queries)

- Filter the lottery draws by checking if the subscriber is on the prize list (application logic)

The implementation included two types of very simple DB queries plus some filtering and aggregation, which was done in plain Java. The queries were implemented in repositories, which could have been tested separately. Filtering and aggregation logic (which was more complex than presented here) were no longer implemented in SQL, so unit-testing (with mocked repositories) was very effective.

This approach lead to an additional benefit. Even though there were more DB queries, they were exactly the same for each user. Caching was much more effective, because queries were no longer parametrized with the ID of the subscriber. Effectively the in-memory cache contained all lottery draws for the last three months. There was not so many of them, so they could have been kept in the memory most of the time.

Story 2: The ultimate lesson: know your domain!

I believe that the most valuable optimizations are strictly related to the business domain we implement. We can learn multiple generic advices and tricks, but the real value is usually in the specifics of the problem itself. Let me share a story from another project to clarify the idea.

Notification subsystem domain

That time I worked on a project for a large shipment & delivery company. In a nutshell, the system received huge load of status updates (regarding consignment processing) and was about to process them and send notifications to the customers.

Each status update had a corresponding status code assigned. Let’s not worry about what that code really meant. The important part is that each such code had to be translated into one of so-called event types.

There was a separate system responsible for the Status Code to Event Type translation. It was an existing web service that exposed two methods:

- One for translating an incoming Status Code into the corresponding Event Type

- Second for translating an Event Type into a list of all the Status Codes assigned to that type

The implementation seemed to be obvious:

- Receive a status update

- Extract the status code

- Call the first web service method to translate the status code into the corresponding event type

- Perform any further processing based on the event type (which is irrelevant for this article).

That would be the end of the story, but let me reveal more details about the data which we processed. It turned out, that we were interested in only five Event Types and any status update of a different event type would be just filtered out. As far as Status Codes, there could have been hundreds of them, as they corresponded to results of different types of physical scans etc.

Using the domain knowledge

Basing on that knowledge, the architect of the project found a very clever improvement. The new implementation included five calls to the second web service method. That is, each time we received a status update, we would call for a list of Status Codes corresponding to each of the five Event Types we were interested in. Based on the result of the calls, we could find if the incoming Status Code was on any of those lists. If it was, we knew its Event Type. If it was absent, then the status update had to be filtered out anyway, so we did not have to know its Event Type. It was enough to know that we were not interested in that type.

But how did trading one call into five calls help us? The thing is, that those mappings hardly ever changed. Using cache seemed like an obvious solution. With the first implementation though, we would call the method with many different values of the parameter (i.e. many different Status Code). It could have happened that the same Status Code was sent so rarely, that the cached result would have never been used before the cache invalidation.

With the second approach, we always sent the same five queries. The reasonable caching policy was introduced so in most of the cases the network round-trip did not occur at all.

Summary

In this article I presented two scenarios in which less-obvious approach enabled better possibilities for caching. In first one we leveraged the fact that some of the queries might be frequently asked by the same application users. In the second case, further analysis of the domain-specific knowledge resulted in a surprising implementation. Of course, using cache might not be an option for some projects, but whenever it is, it will pay off to analyze the actual implementation and make it cache-friendly first. Thank you for reading this post and I hope you will find it useful!